Review of Grad-CAM

Review of Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization

Grad-CAM is a popular class-specific technique for visualizing which image regions a CNN relies on when it makes a prediction.

Grad-CAM: a generalization of CAM

The paper explains that Grad-CAM is a generalization of CAM (class activation mapping), which requires a particular architecture. CAM needs the feature maps to directly precede the softmax layer, so it applies only to CNNs that perform global average pooling over the convolutional maps immediately before prediction. Grad-CAM can be applied to any CNN-based architecture, including those for image captioning and visual question answering. For a fully convolutional architecture of the kind CAM requires (global average pooling followed directly by the classifier), Grad-CAM reduces to CAM. This generalization also makes it possible to generate visual explanations from CNN-based models that cascade convolutional layers with much more complex interactions.
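To make the "reduces to CAM" claim concrete, here is a minimal NumPy sketch on a toy model. It assumes the class score is a linear layer applied to globally average-pooled feature maps, so the gradient can be written down analytically rather than computed by a framework. The sizes, the random values, and the function names are all hypothetical, and the comparison is made before any ReLU is applied.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy sizes: K feature maps of H x W, C classes.
K, H, W, C = 4, 3, 3, 5
A = rng.normal(size=(K, H, W))      # last-layer convolutional feature maps
weights = rng.normal(size=(C, K))   # linear classifier on the pooled features
Z = H * W                           # number of spatial positions

def cam_map(A, weights, c):
    # CAM: weight each feature map by the classifier weight for class c.
    return np.tensordot(weights[c], A, axes=1)

def grad_cam_map_gap_linear(A, weights, c):
    # For y^c = sum_k weights[c, k] * mean(A[k]), the gradient
    # dy^c / dA[k, i, j] equals weights[c, k] / Z at every position.
    grads = np.broadcast_to(weights[c][:, None, None] / Z, A.shape)
    alpha = grads.mean(axis=(1, 2))         # average-pool the gradients
    return np.tensordot(alpha, A, axes=1)   # weighted sum, before ReLU

c = 2
cam = cam_map(A, weights, c)
gcam = grad_cam_map_gap_linear(A, weights, c)

# The two maps differ only by the constant factor 1 / Z.
print(np.allclose(gcam * Z, cam))
```

In this setting the two heatmaps are the same up to a constant scale, which disappears once the map is normalized for display.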

Approach:

The paper describes Grad-CAM as using the gradient information flowing into the last convolutional layer of the CNN to understand the importance of each neuron for a decision of interest. Given an image and a class of interest, the image is forward-propagated through the CNN part of the model and then through the task-specific computations to obtain a raw score for the category. The gradient signal at the output is set to zero for all classes except the desired class, for which it is set to 1. This signal is then backpropagated to the rectified convolutional feature maps of interest, which are combined to compute the coarse Grad-CAM localization, a heatmap showing where the model has to look to make the particular decision.
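The combination step can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the feature maps and their gradients are taken as already computed (in practice an autodiff framework would produce the gradients by backpropagating the one-hot signal), and the function names and toy numbers are made up for the example.

```python
import numpy as np

def one_hot_signal(num_classes, target_class):
    # Output-layer signal: 1 for the class of interest, 0 elsewhere.
    signal = np.zeros(num_classes)
    signal[target_class] = 1.0
    return signal

def grad_cam(activations, gradients):
    """Coarse Grad-CAM map from arrays of shape (K, H, W).

    activations: feature maps of the chosen convolutional layer.
    gradients:   gradient of the class score w.r.t. those feature maps.
    """
    # Importance of each feature map: spatial average of its gradients.
    alpha = gradients.mean(axis=(1, 2))
    # Weighted sum of the feature maps, then ReLU to keep positive evidence.
    weighted = np.tensordot(alpha, activations, axes=1)
    return np.maximum(weighted, 0.0)

# Toy example with two 2x2 feature maps (values are hypothetical).
activations = np.array([[[1.0, 0.0], [0.0, 0.0]],
                        [[0.0, 0.0], [0.0, 2.0]]])
gradients = np.array([[[0.5, 0.5], [0.5, 0.5]],
                      [[-1.0, -1.0], [-1.0, -1.0]]])
heatmap = grad_cam(activations, gradients)
print(heatmap)
```

Here the second feature map receives a negative weight, so the location it responds to is suppressed by the final ReLU and only the region supported by the first map remains.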

Guided Grad-CAM

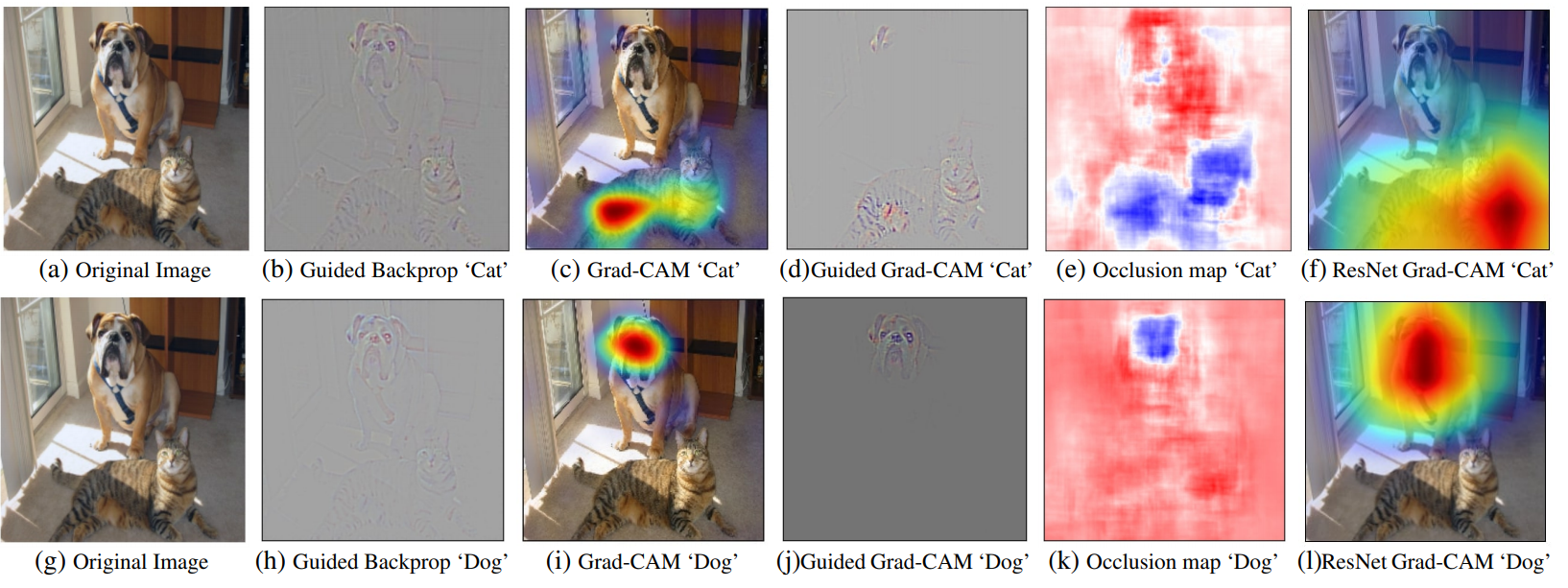

The paper then introduces Guided Grad-CAM by describing a shortcoming of Grad-CAM. Grad-CAM visualizations are class-discriminative and localize relevant image regions well, but they cannot show fine-grained detail. Guided Grad-CAM addresses this by taking the element-wise product of the Grad-CAM map with the guided backpropagation visualization. In guided backpropagation, all negative gradients are set to 0, since we are only interested in which image features a neuron detects, not in what it does not detect.
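A rough sketch of the two ingredients follows: the modified ReLU backward rule used by guided backpropagation, and the element-wise product with the upsampled Grad-CAM map. The function names are hypothetical, and nearest-neighbour upsampling is a simplification chosen to keep the example dependency-free; it assumes the guided backpropagation map has already been computed at input resolution.

```python
import numpy as np

def guided_relu_backward(forward_input, upstream_grad):
    # Ordinary ReLU backward keeps gradients where the forward input was
    # positive; the "guided" rule additionally zeroes negative gradients.
    return upstream_grad * (forward_input > 0) * (upstream_grad > 0)

def upsample_nearest(cam, out_h, out_w):
    # Nearest-neighbour upsampling of a 2-D map (a simplification).
    rows = np.arange(out_h) * cam.shape[0] // out_h
    cols = np.arange(out_w) * cam.shape[1] // out_w
    return cam[np.ix_(rows, cols)]

def guided_grad_cam(guided_backprop_map, cam):
    # guided_backprop_map: (H, W) or (H, W, channels) at input resolution.
    # cam: coarse Grad-CAM map of shape (h, w).
    h, w = guided_backprop_map.shape[:2]
    cam_up = upsample_nearest(cam, h, w)
    if guided_backprop_map.ndim == 3:
        cam_up = cam_up[..., None]   # broadcast over colour channels
    return guided_backprop_map * cam_up

cam = np.array([[1.0, 0.0],
                [0.0, 0.5]])
gb = np.ones((4, 4, 3))              # stand-in guided backprop map
print(guided_grad_cam(gb, cam)[..., 0])
```

The product keeps the pixel-level detail of guided backpropagation only in the regions that Grad-CAM marks as relevant to the chosen class.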

Localization:

To evaluate localization, Grad-CAM maps are generated for each of the predicted classes and thresholded, and a bounding box is then drawn around the single largest segment. As with classification, evaluation is performed on the top-1 and top-5 predicted categories.
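The threshold-and-box step might look roughly like the sketch below. The threshold fraction `frac` and its default value are assumptions for illustration, not taken from this review, as are the function name and the choice of 4-connectivity for deciding which pixels belong to the same segment.

```python
from collections import deque
import numpy as np

def largest_segment_bbox(cam, frac=0.15):
    """Return (row_min, col_min, row_max, col_max) of the largest segment.

    cam:  2-D heatmap.
    frac: assumed threshold, as a fraction of the map's maximum value.
    """
    peak = cam.max()
    if peak <= 0:
        return None                      # nothing to localize
    mask = cam > frac * peak             # binarize the heatmap
    seen = np.zeros(mask.shape, dtype=bool)
    h, w = mask.shape
    best = []
    for r0, c0 in zip(*np.nonzero(mask)):
        if seen[r0, c0]:
            continue
        # Breadth-first search over 4-connected neighbours.
        seen[r0, c0] = True
        queue, segment = deque([(r0, c0)]), []
        while queue:
            r, c = queue.popleft()
            segment.append((r, c))
            for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
                if 0 <= nr < h and 0 <= nc < w and mask[nr, nc] and not seen[nr, nc]:
                    seen[nr, nc] = True
                    queue.append((nr, nc))
        if len(segment) > len(best):
            best = segment
    rows = [r for r, _ in best]
    cols = [c for _, c in best]
    return int(min(rows)), int(min(cols)), int(max(rows)), int(max(cols))

# Toy heatmap: one isolated hot pixel and one larger 2x3 blob.
cam = np.zeros((5, 6))
cam[0, 0] = 1.0
cam[2:4, 3:6] = 0.8
print(largest_segment_bbox(cam))
```

The isolated pixel passes the threshold but forms a smaller segment, so the box is drawn around the 2x3 blob.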

Explainability vs interpretability

The paper then talks about “explainability” vs “interpretability”. Explainability indicates whether it is possible to explain how a model made its decision, although the explanation is not guaranteed to make sense to all humans. For instance, the explanation for a boat classification may turn out to be that the model looked at the blue water. The explanation is not guaranteed to be free of biases. Interpretability means that a model’s parameters can be intuitively understood; for instance, the weights of a logistic regression signify the importance of its features.

Grad-CAM explains what a trained CNN does; it does not make the model interpretable. Even when a Grad-CAM heatmap makes sense, it must be used with caution in mission-critical applications.

Discussion

Overall, Grad-CAM is useful for debugging a model and probing its explainability. For applications involving sensitive information, it might be inappropriate to use Grad-CAM or any attention visualization mechanism, depending on how it is going to be used. The vanilla Grad-CAM method is also a great option, as it does not depend on guided backpropagation. Recent work suggests that guided backprop performs partial image recovery and acts as an edge detector, rather than providing insight into a trained model.